Clearing up common misconceptions about algorithmic recommendations on streaming platforms.

Algorithmic recommendation systems have become the dominant mode of music discovery — and a central pillar of modern music marketing. Yet artists and their teams often struggle to understand why some tracks receive algorithmic support while others fail to find their footing. This confusion tends to surface in a familiar form: why do some releases end up in Discover Weekly, Release Radar, or radio autoplay, while others do not?

For many artists and music professionals, this lack of response becomes the first major pain point when attempting to increase their algorithmic visibility. It leaves them searching for answers about what “the algorithm” actually responds to — and what, if anything, can be done to improve a track’s discoverability on Spotify and other streaming platforms.

A quick Google search yields no shortage of confident answers. Blog posts, YouTube explainers, and marketing guides promise clarity, often boiling complex systems down to surface-level metrics, anecdotal thresholds, or one-size-fits-all promotional tactics. Yet much of this advice remains misguided — and for many artists and their teams, following these metric-chasing strategies becomes increasingly costly.

Common misconceptions about Discover Weekly, Release Radar, and algorithmic recommendation systems

In this article, we take a closer look at some of the most common misconceptions surrounding algorithmic optimization, and examine whether they hold up when viewed through the lens of how modern recommender systems actually work. For readers looking for a deeper, system-level breakdown of Spotify’s recommendation stack, we cover that foundation in detail in our complete guide to how Spotify’s recommendation system works.

“Algorithmic playlists are unlocked once you cross a popularity threshold”

A widespread belief is that Discover Weekly and similar features activate once a track reaches a certain level of traction. The idea of a hidden threshold you need to cross to “unlock” algorithmic features — whether framed around streams, listeners, or early engagement benchmarks — is intuitive, but it does not reflect how modern recommendation systems operate.

Advice such as “get your track to a few thousand streams and a strong save count within the first weeks” offers tangible, reassuring, and actionable targets. The problem is that these targets confuse volume with signal quality. In practice, recommender systems do not wait for a track to reach some predefined scale threshold. Instead, they continuously evaluate how likely a track is to resonate with a given listener, in a given listening context.

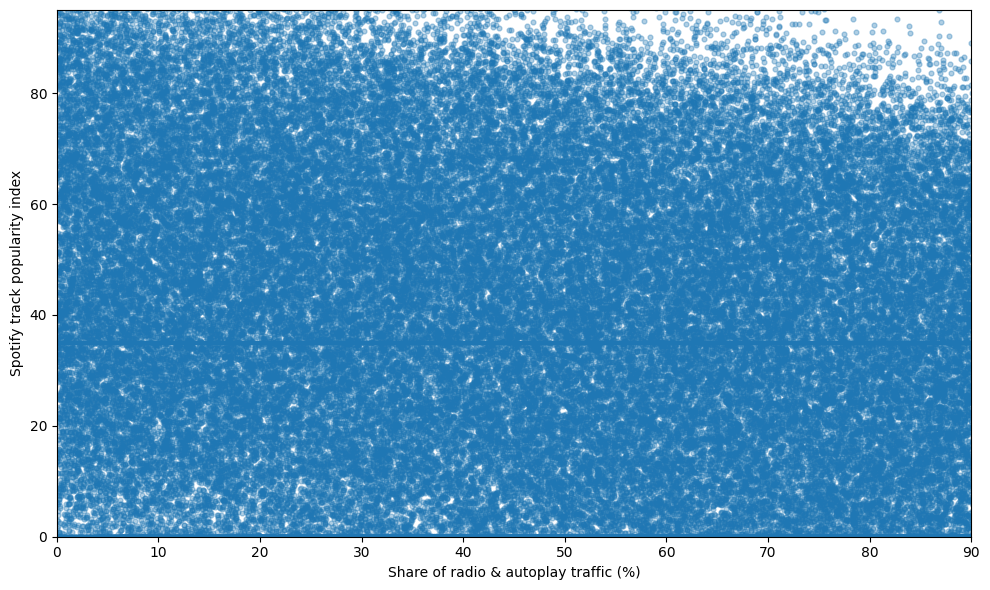

Over the past few years, Music Tomorrow has conducted a number of algorithmic audit projects, amassing a dataset covering more than 500,000 unique tracks. Across this dataset, we observed no meaningful relationship between a track’s popularity and its relative algorithmic exposure. Tracks of vastly different scale frequently received comparable algorithmic treatment, while similarly sized releases diverged sharply. What mattered was not how big the track was, but how clear and legible its early signals were within the system. Tracks that surfaced through algorithmic recommendations did so because they managed to “convince” the system — not through volume alone, but through the quality, coherence, and contextual consistency of their signals.

Source: Music Tomorrow

“There are fixed engagement benchmarks you need to hit”

Another common misconception frames algorithmic exposure as a matter of hitting the “right” engagement ratios — saves, skip rates, completion rates — as if these signals were evaluated against fixed benchmarks.

This framing is closer to how recommender systems operate than raw popularity thresholds, but it still misses a crucial point: engagement signals are always interpreted in context.

A save or skip does not carry intrinsic meaning on its own. The same behavior can be interpreted very differently depending on the listening surface, the listener’s intent in that moment, and the coherence of the audience being tested. Engagement generated by a small but well-defined listener community often teaches the system far more than engagement produced by a diffuse or poorly aligned audience.

Besides, “good engagement” itself can take very different forms depending on the user, genre, and session context. A skip or a save can translate into very different signals for the system when we compare a laid-back session on a “Smooth Jazz Mix” versus a lean-forward exploratory session on Discover Weekly or Release Radar. In the first case, consumption happens largely in the background — so the strongest positive signal may be uninterrupted listening. In the second, users are far more likely to skip between tracks and artists — making skips a much weaker indicator of dissatisfaction.

Treating engagement as a universal score to optimize for obscures the system’s underlying goal: reducing uncertainty about where and for whom a track can be recommended reliably.

“Any playlisting helps the algorithm learn faster”

Playlist placement is often presented as a shortcut to algorithmic traction, under the assumption that any increase in playlist adds helps the recommender system learn faster. In reality, playlist placements only become useful when they reinforce meaningful artist connections — when they clarify where the track “belongs,” and in which discovery contexts it is likely to perform well.

A common example is early placement on large, generalist editorial playlists such as New Music Friday. For a developing artist, this can generate a sudden spike in streams and place the track alongside some of the biggest releases of the week.

On the surface, this looks like a clear win. In practice, however, this type of exposure often leaves very little behind once the track is removed from the playlist. Joint listenership is broad and heterogeneous, listener intent is mixed, and engagement signals tend to be scattered across unrelated contexts. Rather than helping the system learn where the track belongs, this produces disjointed signals that are difficult to generalize.

The result is often a track that enjoys a moment of visibility but lacks the clarity and consistency of signals required for sustained algorithmic support. Broad or poorly aligned playlisting can generate large volumes of consumption data while adding very little structural information — increasing uncertainty rather than accelerating discovery.

On the other hand, streams from playlists that are well aligned with algorithmic targets — for example, a Dreampop playlist placement for an emerging artist within that niche — will often continue to generate meaningful downstream impact long after the track is removed.

“There is a repeatable formula to get on Discover Weekly, Release Radar, or Algorithmic Radio”

Much of the advice around algorithmic optimization ultimately rests on the promise of a repeatable recipe: follow a defined sequence of steps, hit the right metrics, and algorithmic exposure will follow.

The promise is appealing, but it reflects a static view of a system that is fundamentally adaptive. Modern recommender systems continuously adjust to genre-specific dynamics, listener intent, and evolving consumption patterns. What works for one release, audience, or moment in time does not translate cleanly to another.

Any framework that claims to offer a universal formula inevitably abstracts away the very mechanisms — context, placement, and unique artist fit — that actually determine algorithmic outcomes.

Popularity & engagement ≠ discoverability

Spotify’s recommender system does not distribute exposure as a reward for past success. At its core, it is a matching system — designed to place the right track in front of the right listener at the right moment, while minimizing the risk of dissatisfaction.

That said, popularity and engagement are not irrelevant. Consistent engagement over time often reflects genuine audience fit — and that fit is precisely what allows algorithmic exposure to grow. But surface-level popularity or engagement does not automatically translate into algorithmic potential.

At its core, every recommendation reflects a confidence-based decision: given this listener, in this context, how likely is it that this track will resonate? When a track appears in Discover Weekly, Radio, or another personalized surface, it is not because it has earned a symbolic promotion or a checkmark — it is because the system has accumulated enough consistent, context-specific evidence to make that placement with limited risk and high expected user satisfaction.

Not all consumption signals generate useful evidence. For example, an alt-R&B artist featured in genre-aligned, mood-specific playlists such as Lowkey or DND. will typically generate far clearer algorithmic feedback than the same artist appearing in a broad Viral Hits playlist. In the first case, listener intent and taste alignment reinforce the system’s understanding; in the second, audience signals are fragmented and diluted.

Streams that are well anchored — driven by listeners with aligned intents and taste profiles, within relevant listening contexts — help the system qualify a track more precisely. Broad, heterogeneous, or poorly contextualized exposure may increase raw stream counts, but often teaches the system very little about how and where a track can be recommended with confidence.

This is why visibility alone is a weak predictor of algorithmic success. Over time, Spotify’s recommender has evolved from relatively simple collaborative filtering into a layered system built on rich track and user representations — derived from audio, metadata, playlists, listening sessions, and semantic context — and applied across multiple recommendation surfaces optimized for different listening intents. Tracks are not evaluated as isolated assets, but as nodes embedded within a dense relational graph encoding sound, context, usage, and cultural proximity. Within such a system, scale and traction are outcomes of effective positioning, audience response, and discoverability — not prerequisites for it.

Why do streams, listeners, followers, and popularity metrics fail to predict algorithmic potential?

Metrics like streams, listeners, followers, popularity indexes, or even algorithmic streams describe outcomes after the fact. They summarize what has already happened, but say very little about how the recommender system is interpreting a release — or how it is likely to act next.

Two tracks can generate similar volumes of algorithmic streams while occupying very different internal positions. One may be in an active expansion phase, with the system testing it across new listener contexts and connected audiences. The other may be largely saturated within a narrow set of listeners, with limited evidence that its algorithmic footprint can extend further.

When these dynamics are collapsed into a single number, critical information is lost. This is why algorithmic performance often feels opaque or inconsistent to DIY artists and label teams alike: surface-level metrics describe visibility, but fail to capture the underlying structures that determine discoverability.

How to judge a track’s algorithmic performance and potential

A more useful way to think about algorithmic performance is to separate scale from efficiency. Algorithmic efficiency describes how effectively a track converts context-specific exposure — even when limited — into satisfaction signals that allow the system to expand recommendations beyond the artist’s existing audience.

Emerging artists often excel in this dimension because early audiences tend to be consistent. Engagement patterns are concentrated, and similarity relationships are easier for the system to interpret. Larger catalogs, by contrast, can suffer from ambiguity and inertia: fragmented audiences, passive consumption driven by legacy exposure, and mixed historical signals that make confident expansion more difficult. An established artist may be stably recommended within their core audience yet struggle to travel beyond it, creating a structural barrier to further discovery.

Once this distinction is clear, the framing of algorithmic success changes. Instead of asking “Why doesn’t this track get Discover Weekly?”, a more productive question becomes:

Where is this track currently positioned within the recommendation system — and how likely is it to be recommended again within those contexts?

For catalogs that generate little to no algorithmic exposure, the answer is often straightforward: the system does not yet have enough confidence to recommend the track anywhere at scale. But addressing that gap is rarely about broad, spray-and-pray tactics or accumulating arbitrary forms of exposure. It is about identifying the earliest, most aligned algorithmic audiences — and reinforcing those signals with tailored marketing actions.

Regardless of artist or catalog scale, effective algorithmic optimization requires moving beyond popularity metrics toward structural analysis: examining how a track is positioned, which listener communities it reaches, how feedback evolves as exposure grows, and how the surrounding ecosystem behaves over time.

More on that next.

In Part II, we build on this foundation to share Music Tomorrow’s algorithmic audit framework, focused on four key questions:

- Where and how is the track or artist positioned by the recommender system?

- How much relevant audience remains to be explored?

- How does current exposure convert into positive listener feedback?

- Does the surrounding scene provide room for further expansion?

Together, these lenses turn opaque algorithmic performance signals into a structured, actionable foundation for algorithmic strategy — clearly showing where further marketing investment is likely to drive sustained algorithmic support, and where opportunities for additional growth have already been exhausted.